|

|

市場調査レポート

商品コード

1744404

エンドツーエンド(E2E)自動運転(2025年)End-to-End Autonomous Driving Research Report, 2025 |

||||||

|

|||||||

| エンドツーエンド(E2E)自動運転(2025年) |

|

出版日: 2025年05月06日

発行: ResearchInChina

ページ情報: 英文 500 Pages

納期: 即日から翌営業日

|

全表示

- 概要

- 目次

エンドツーエンド自動運転の本質は、大規模で高品質な人間の運転データを通じて運転行動を模倣することにあります。技術的な観点からは、模倣学習に基づくアプローチは人間レベルの運転性能に近づくことはできますが、人間の認知限界を超えることは難しいです。さらに、運転データセットには高品質なシナリオデータが乏しく、データ品質も不均一であるため、エンドツーエンドソリューションが人間レベルの能力に到達するのは極めて困難です。これらのシステムは通常、トレーニングに数百万もの高品質な運転クリップを必要とするため、スケーラビリティの閾値が高いことが、進歩をさらに複雑にしています。

2025年初頭にDeepSeek-R1モデルが業界で話題となった後、その革新的な強化学習(RL)のみの技術的経路が独自の利点を実証しました。このアプローチは、最小限の高品質データでコールドスタートアップを達成し、多段階のRLトレーニングメカニズムを採用することで、大規模モデルのトレーニングにおけるデータ規模への依存を効果的に低減します。この「スケーリング法則」の拡張により、モデルの継続的な拡張が可能になります。RLの革新は、エンドツーエンド自動運転にも転用でき、環境認識、進路計画、意思決定をより高い精度で強化することができます。これは、より大規模でより有能なインテリジェントモデルを構築するための基礎を築くものです。

重要なことは、RLフレームワークが対話的環境において自律的に推論チェーンを生成することに優れており、大規模モデルが思考連鎖(CoT)能力を開発することを可能にすることです。これにより、論理的な推論の効率が大幅に向上し、人間の認知的制約を超える可能性さえ引き出すことができます。ワールドモデルによって生成されたシミュレーション環境と相互作用することで、エンドツーエンド自動運転モデルは現実世界の物理的ルールをより深く理解できるようになります。このRL主導の技術的道筋は、アルゴリズム開発への新しいアプローチを提供し、従来の模倣学習の限界を打ち破ることを約束します。

I VLAパラダイムへのエンドツーエンドモデルの移行

エンドツーエンドモデルは、ニューラルネットワークを介して視覚入力を駆動軌道出力に直接マッピングします。しかし、物理世界の力学を本質的に理解していないため、これらのモデルは明示的な意味理解や論理的推論なしに動作します。言葉による命令、交通ルール、文字情報を解釈することができません。さらに、3次元空間知覚が限られているため、ロングテールのシナリオでは汎化が制限されます。

VLA(Visual-Language-Action)パラダイムは、LLM(Large Language Models)をアーキテクチャに統合することで、決定的な改良をもたらします。これにより、元のシングルモダリティ視覚行動システムは、視覚、言語、行動を組み合わせたマルチモーダルフレームワークに変換されます。LLMを組み込むことで、人間のような常識と論理的推論が自動運転システムに注入され、データドリブンの「弱いAI」から、インテリジェンスドリブンの「ジェネラリストシステム」へと移行します。

II VLAモデルの学習過程と強化学習の応用

大規模言語モデル(LLM)の事後学習では、強化学習(RL)がますます普及しています。例えば、今年の目玉モデルであるDeepSeek-R1は、RLを中心的な学習方法として活用しています。適切な報酬メカニズムを設計することで、RLは基盤モデルの推論能力を効果的に活性化させました。当初は言語モデルで実証されたこの技術的優位性は、現在では自動運転業界でも注目されており、複数のメーカーがADAS技術にRLを組み込んでいます。

VLAモデルのトレーニングは2段階に分けられます。「ベースモデルの事前学習」と「ドメインの微調整」です。事前学習段階では、文脈の理解、論理的推論など、膨大なデータを通じてモデルに一般的な認知能力を与えます。インテリジェントドライビング分野の微調整段階では、教師あり学習によって基本的な運転ルール(車線維持や障害物認識など)を確立し、強化学習(RL)の助けを借りて重要なアップグレードを完了する必要があります。

当レポートでは、中国の自動車産業について調査分析し、エンドツーエンド(E2E)自動運転の技術ロードマップと開発動向、国内外のサプライヤーなどの情報を提供しています。

目次

第1章 エンドツーエンドインテリジェントドライビング技術の基礎

- エンドツーエンドインテリジェントドライビングの用語と概念

- エンドツーエンドインテリジェントドライビングのイントロダクションと現状

- 概要

- エンドツーエンドモデル実装方式

- エンドツーエンドモデル検証方式

- 代表的なエンドツーエンドインテリジェントドライビングの事例

- 基盤モデル

- 基盤モデルのイントロダクション

- 基盤モデル:大規模マルチモーダルモデル

- 基盤モデル:マルチモーダル大規模言語モデル

- VLM・VLA

- 世界モデル

- E2E-AD動作計画モデルの比較

- VLAモデル

- 拡散モデル

- ELM(Embodied Language Model)

第2章 E2E-ADの技術ロードマップと開発動向

- エンドツーエンドインテリジェントドライビングの技術動向

- エンドツーエンドインテリジェントドライビングの市場動向

- エンドツーエンドインテリジェントドライビングチーム構築

第3章 エンドツーエンドインテリジェントドライビングサプライヤー

- MOMENTA

- DeepRoute.ai

- Huawei

- Horizon Robotics

- Zhuoyu Technology

- NVIDIA

- Bosch

- Baidu

- SenseAuto

- QCraft

- Wayve

- Waymo

- GigaAI

- LightWheel AI

- PhiGent Robotics

- Nullmax

- Mobileye

- Motovis

第4章 OEMのエンドツーエンドインテリジェントドライビングのレイアウト

- Xpengのエンドツーエンドインテリジェントドライビングのレイアウト

- Li Autoのエンドツーエンドインテリジェントドライビングのレイアウト

- Teslaのエンドツーエンドインテリジェントドライビングのレイアウト

- Zeronのエンドツーエンドインテリジェントドライビングのレイアウト

- Geely & ZEEKRのエンドツーエンドインテリジェントドライビングのレイアウト

- Xiaomi Autoのエンドツーエンドインテリジェントドライビングのレイアウト

- NIOのエンドツーエンドインテリジェントドライビングのレイアウト

- Changan Automobileのエンドツーエンドインテリジェントドライビングのレイアウト

- Mercedes-Benzのエンドツーエンドインテリジェントドライビングのレイアウト

- Cheryのエンドツーエンドインテリジェントドライビングのレイアウト

- GAC

- Leapmotor

- IM Motors

- Hongqi

End-to-End Autonomous Driving Research: E2E Evolution towards the VLA Paradigm via Synergy of Reinforcement Learning and World Models

The essence of end-to-end autonomous driving lies in mimicking driving behaviors through large-scale, high-quality human driving data. From a technical perspective, while imitation learning-based approaches can approach human-level driving performance, they struggle to transcend human cognitive limits. Additionally, the scarcity of high-quality scenario data and uneven data quality in driving datasets make it extremely challenging for end-to-end solutions to reach human-level capabilities. The high scalability threshold further complicates progress, as these systems typically require millions of high-quality driving clips for training.

Following the industry buzz around the DeepSeek-R1 model in early 2025, its innovative reinforcement learning (RL)-only technical path demonstrated unique advantages. This approach achieves cold startup with minimal high-quality data and employs a multi-stage RL training mechanism, effectively reducing dependency on data scale for large model training. This extension of the "scaling laws" enables continuous model expansion. Innovations in RL can also be transferred to end-to-end autonomous driving, enhancing environmental perception, path planning, and decision-making with greater precision. This lays the foundation for building larger, more capable intelligent models.

Crucially, RL frameworks excel at autonomously generating reasoning chains in interactive environments, enabling large models to develop Chain-of-Thought (CoT) capabilities. This significantly improves logical reasoning efficiency and even unlocks potential beyond human cognitive constraints. By interacting with simulation environments generated by world models, end-to-end autonomous driving models gain deeper understanding of real-world physical rules. This RL-driven technical path offers a novel approach to algorithm development, promising to break traditional imitation learning limitations.

I Transition of End-to-End Models towards the VLA Paradigm

End-to-end models directly map visual inputs to driving trajectory outputs via neural networks. However, lacking intrinsic understanding of physical world dynamics, these models operate without explicit semantic comprehension or logical reasoning. They fail to interpret verbal commands, traffic rules, or textual information. Furthermore, their limited 3D spatial perception restricts generalization in long-tail scenarios.

The Visual-Language-Action (VLA) paradigm introduces critical improvements by integrating Large Language Models (LLMs) into the architecture. This transforms the original single-modality vision-action system into a multimodal framework combining vision, language, and action. The inclusion of LLMs injects human-like common sense and logical reasoning into autonomous driving systems, transitioning from data-driven "weak AI" to cognitive intelligence-driven "generalist systems."

VLA Input: Signals received from cameras, navigation systems, maps, and other devices. These signals are processed by two encoders:

Vision Encoder: Encodes image data to extract high-level features of the road environment.

Text Encoder: Processes text information generated from human-vehicle interactions, such as voice commands or parameter settings.

VLA Output:

Trajectory Decoder: Converts model-generated information into specific trajectory signals, outlining the vehicle's driving plan for the next 10 to 30 seconds, including speed control and route details.

Text Decoder: Simultaneously generates natural language explanations for the decisions. For example, when detecting a pedestrian crossing the road, the system not only plans a deceleration-and-stop trajectory but also outputs a textual explanation like "Pedestrian crossing detected; slowing down and stopping." This ensures transparency in decision-making.

Core Breakthroughs of VLA

World Model Construction: VLA extracts rich environmental information from sensor data, leverages language models to interpret human instructions, and generates explainable decision-making processes. It then translates multimodal inputs into actionable driving commands.

Chain-of-Thought (CoT) Reasoning: VLA autonomously generates reasoning chains in interactive environments, enabling logical inference capabilities that surpass human cognitive limits. With the support of large models, VLA enhances visual and spatial understanding beyond traditional end-to-end approaches.

By 2030, VLA-centric end-to-end solutions are projected to dominate over 50% of L3/L4 autonomous driving markets, reshaping the value chain of traditional Tier-1 suppliers.

Li Auto's MindVLA Evolution Path

In 2025, Li Auto integrated End-to-End (E2E) and Visual Language Model (VLM) approaches into an advanced Vision-Language-Action (VLA) architecture. This shift addressed critical limitations in the previous dual-system framework:

Constraint 1: The dual-system pipeline processed inputs (cameras, LiDAR, vehicle pose, navigation) through a 3D encoder and directly output trajectories via an action decoder. However, its discriminative AI-based E2E model lacked generalization and common-sense reasoning, struggling with long-tail scenarios. While simple, it failed in complex spatial understanding and language interactions.

Constraint 2: System 2 (semantic assistant) supported System 1 (E2E) but suffered from technical shortcomings. Existing VLMs relied on 2D front-camera data, neglecting omnidirectional inputs from surround-view and rear cameras. Additionally, System 2 lacked robust 3D spatial understanding-a core requirement for trajectory planning.

Under the VLA architecture, V-Spatial Intelligence is responsible for 3D data processing and spatial understanding, equipped with a 3D word segmentation device; L-Linguistic Intelligence uses MindGPT large-scale language models to fuse spatial markers and process semantic information; and A-Action Policy integrates decisions through collective action generators to generate action trajectories. The MindVLA architecture enhances the tokenization of spatial information (3D Tokenizer), scene understanding of language models (MindGPT), and collective action generation capabilities (Collective Action Generator), enabling VLA to maintain strong spatial language reasoning capabilities while realizing the collective modeling and alignment of the characteristics of the three modes of vision, language, and action in a unified space, which is expected to solve the intelligent decision-making requirements in future complex scenarios.

II Training Process of VLA Models and Application of Reinforcement Learning

In post-training of Large Language Models (LLMs), reinforcement learning (RL) has become increasingly prevalent. For instance, DeepSeek-R1, a standout model this year, leveraged RL as a core training method. By designing appropriate reward mechanisms, RL effectively activated the foundational model's reasoning capabilities. This technical advantage, initially proven in language models, has now drawn attention in the autonomous driving industry, with multiple manufacturers integrating RL into their ADAS technologies.

The training of the VLA model is divided into two stages: "pre-training of the base model" and "domain fine-tuning". In the pre-training stage, the model is given general cognitive abilities through massive data, such as understanding context, logical reasoning, etc. In the fine-tuning stage of the intelligent driving field, basic driving rules (such as lane keeping and obstacle recognition) need to be established through supervised learning, and then key upgrades need to be completed with the help of reinforcement learning (RL). Reinforcement learning draws on the successful experience in natural language processing (such as RLHF alignment of human preferences), and optimizes decision-making in driving scenarios through the "open-loop + closed-loop" mechanism: the open-loop stage uses historical takeover data to calibrate safety logic, and the closed-loop stage simulates extreme working conditions through virtual scene generation technology (such as world model), allowing the model to actively trial and error and iterate strategies, breaking through the limitations of traditional end-to-end models relying on massive amounts of labeled data.

Imitation Learning (IL)

The scenario cloning strategy (BC) in imitation learning, the core of which is to formulate a strategy by learning driving trajectory of experts such as human drivers. In the field of intelligent driving, this method mainly relies on analyzing large amounts of driving data to imitate human driving behavior. Its advantage is that it is simple to implement and computationally efficient, but its shortcomings are also obvious - it is difficult to deal with unseen special scenarios or abnormal situations.

From the perspective of the training mechanism, the scenario cloning strategy adopts an open-loop approach and relies on the driving demonstration data of the distribution law. However, real driving is a typical closed-loop process, and the subtle deviations of each step may accumulate over time, forming compound errors and triggering unknown scenarios. As a result, the strategy trained by scenario cloning often performs poorly in unfamiliar situations, and its robustness has attracted industry attention.

The principle of reinforcement learning (RL) is to optimize action strategies through reward functions:

The reinforcement learning model continuously interacts in the simulated traffic scene and relies on the reward mechanism to adjust and optimize the driving strategy. This way, the model can learn more reasonable decisions in the complex and dynamic traffic environment. However, reinforcement learning has obvious shortcomings in practical applications: on the one hand, the training efficiency is not high, and a lot of trial and error is required to obtain the usable model; on the other hand, it cannot be trained directly in the real road environment - after all, the real driving scene cannot afford frequent trial and error, and the cost is too high. Most of the current simulation training is based on the sensor data generated by the game engine, and the real environment relies on the information of the object itself rather than the sensor input, resulting in the gap between the simulation results and the actual scene.

Another problem is the human behavior alignment: the reinforcement learning exploration process may cause the model strategy to deviate from human driving habits and act incoherently. To address this, imitation learning is often integrated as a regularization term during RL training, incorporating human driving data to align policies with human behavior.

Li Auto's MindVLA Training Methodology

Stage I: The training process of Li Auto's VLA model is divided into four stages: VL visual language base model pre-training, assisted driving post-training, assisted driving reinforcement learning, and driver agent construction. Among them, the pre-training of VL base model is the core link of the entire training system. In the early dual-system stage, Li Auto used Ali Tongyi Qianwen's Qwen-VL visual language model, but when developing the latest VL base model, by partially integrating DeepSeek language model capability, Li Xiang said that the 9-month research and development cycle was effectively shortened, saving hundreds of millions of yuan in development costs.

Based on the pre-trained base model, Li Auto further optimizes the technology, and generates a small vehicle end-specific model with 3.60 billion parameters through model distillation technology to meet the deployment requirements of the vehicle computing platform.

Stage II & III:

The final goal of the VLA model trained in the cloud is to be applied on the vehicle platform. Due to the difference between the computing power of the vehicle and the cloud, the cloud model needs to be distilled and optimized by model compression techniques such as pruning and quantization. The specific method of Li Auto is: after completing the training of the VL base model with 32 billion parameters, it is first distilled into a 4 billion parameter model adapted to the computing power conditions of the vehicle. On this basis, reinforcement learning training is carried out to ensure that the model can not only meet the operation requirements of the vehicle computing platform, but also maintain sufficient decision-making ability.

The second and third stages of Li Auto VLA model training - assisted driving post-training and reinforcement learning can be seen as fine-tuning the base model in the field of intelligent driving. Among them, the post-training stage adopts the open-loop imitation learning method of the traditional end-to-end solution, while the reinforcement learning stage combines the open-loop and closed-loop modes to become the core improvement point of VLA model training.

Specifically:

Open-loop reinforcement learning: Using RLHF's reinforcement learning mechanism based on human feedback, the main goal is to adapt driving strategies to human driving habits and safety standards.Li Auto uses the accumulated human takeover vehicle data for training, so that the model clearly distinguishes between "reasonable operation" and "dangerous behavior", and completes the calibration of basic driving logic.

Closed-loop reinforcement learning (RL): High-intensity iterative training of the model by building a world model to generate a large number of virtual training and simulation scenarios. This method breaks the limitation of traditional reliance on real road condition data, greatly reduces the time and cost of actual road testing, and achieves an improvement in training efficiency.

These two phases complete the crucial transition from the basic model to the dedicated driving model by combining "first alignment of human preferences and then deep optimization through virtual scenarios".

III Synergistic Applications of World Models and RL

World models are pivotal for end-to-end autonomous driving training, evaluation, and simulation. They generate realistic synthetic videos from sensor inputs and vehicle states, enabling safe, controlled virtual environments for strategy assessment and physical rule comprehension.

RL Training Mechanism:

The essence of the world model is a model based on neural networks, which can establish a correlation model between environmental states, action choices, and feedback rewards, and directly guide the behavioral decision-making of the agent. In intelligent driving scenarios, this model can generate optimal action strategies based on real-time environmental states. More importantly, it can build a virtual interactive environment close to real dynamics, providing a closed-loop training platform for reinforcement learning - the system continuously receives reward feedback in the simulation environment and continuously optimizes the strategy.

Through this mechanism, the two core capabilities of the end-to-end model are expected to be significantly improved: one is the perception ability, the recognition accuracy and understanding ability of environmental elements such as vehicles, pedestrians, obstacles, etc., and the other is the predictive ability, the predictive accuracy of other traffic participants' behavioral intentions. This whole chain optimization from perception to decision-making is the core value of empowering intelligent driving by the world model.

Huawei's recently released "Qiankun Smart Driving ADS 4" also applies world model technology. In its "World Engine + World Behavior Model (WEWA) " technical architecture, the "World Engine" in the cloud is responsible for generating various extremely rare driving scenarios and converting these scenarios into "training questions" for the intelligent driving system, just like the "question-setting examiner" for the simulation test. The "World Behavior Model" on the vehicle side has full-modal perception and multi-MoE expert decision-making capabilities, acting as a "practical instructor", allowing the intelligent driving system to accumulate experience in dealing with complex scenarios in the simulation environment, and realize the ability to advance from theory to practice.

The cloud world base model recently released by Xpeng takes large language model as the core architecture, completes training through massive high-quality multi-modal driving data, and has the ability of visual semantic understanding, logical chain reasoning, and driving action generation. At present, the team is focusing on the development of a super-large-scale world base model with 72 billion parameter scale. The cloud model constructs the whole process technology link from base model pre-training, reinforcement learning post-training, model distillation, vehicle model pre-training, and deployment on the car. The whole system adopts the technical route of combining reinforcement learning and model distillation, which can efficiently produce end-side deployment models with small volume and high intelligence level.

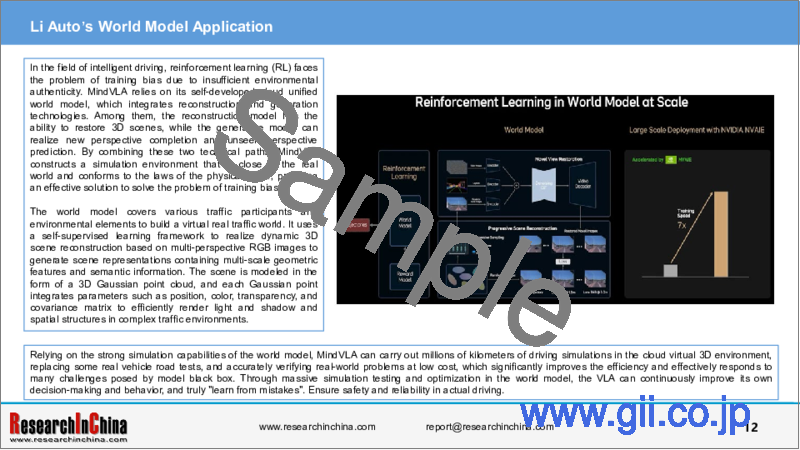

Li Auto's World Model Application:

In the field of intelligent driving, reinforcement learning (RL) faces the problem of training bias due to insufficient environmental authenticity. MindVLA relies on its self-developed cloud unified world model, which integrates reconstruction and generation technologies. Among them, the reconstruction model has the ability to restore 3D scenes, while the generative model can realize new perspective completion and unseen perspective prediction. By combining these two technical paths, MindVLA constructs a simulation environment that is close to the real world and conforms to the laws of the physical world, providing an effective solution to solve the problem of training bias.

The world model covers various traffic participants and environmental elements to build a virtual real traffic world. It uses a self-supervised learning framework to realize dynamic 3D scene reconstruction based on multi-perspective RGB images to generate scene representations containing multi-scale geometric features and semantic information. The scene is modeled in the form of a 3D Gaussian point cloud, and each Gaussian point integrates parameters such as position, color, transparency, and covariance matrix to efficiently render light and shadow and spatial structures in complex traffic environments.

Relying on the strong simulation capabilities of the world model, MindVLA can carry out millions of kilometers of driving simulations in the cloud virtual 3D environment, replacing some real vehicle road tests, and accurately verifying real-world problems at low cost, which significantly improves the efficiency and effectively responds to many challenges posed by model black box. Through massive simulation testing and optimization in the world model, the VLA can continuously improve its own decision-making and behavior, and truly "learn from mistakes". Ensure safety and reliability in actual driving.

Table of Contents

1 Foundation of End-to-end Intelligent Driving Technology

- 1.1 Terminology and Concept of End-to-end Intelligent Driving

- Terminology Explanation of End-to-end Intelligent Driving

- Terminology Explanation of End-to-end Intelligent Driving

- Connection and Difference between End-to-end Related Concepts

- 1.2 Introduction to and Status Quo of End-to-end Intelligent Driving

- 1.2.1 Overview

- Background of End-to-end Intelligent Driving

- Deduction of Impacts of AI Foundation Models on Intelligent Driving Industry Pattern

- Reason for End-to-end Intelligent Driving: Business Value

- Transformer Empowers Autonomous Driving

- Difference between End-to-end Architecture and Traditional Architecture (1)

- Difference between End-to-end Architecture and Traditional Architecture (2)

- End-to-end Architecture Evolution

- End-to-end Architecture Evolution

- End-to-end Autonomous Driving Evolution Path

- Progress in End-to-end Intelligent Driving (1)

- Progress in End-to-end Intelligent Driving (2)

- Progress in End-to-end Intelligent Driving (2)

- Comparison between One Model and Two Model End-to-end Intelligent Driving

- Performance Parameter Comparison between One Model/segmented End-to-end Systems

- Significance of Introducing Multi-modal Models to End-to-end Intelligent Driving

- Problems and Solutions for End-to-end Mass Production: Computing Power Supply/Data Acquisition

- Problems and Solutions for End-to-end Mass Production: Team Building/Explainability

- Progress and Challenges of E2E-AD: Generative World Model + Neural Network Simulator + RL Will Accelerate Innovation

- Perception Layer under End-To-End Architecture

- 1.2.2 End-to-end Model Implementation Modes

- Two Implementation Modes for End-To-End

- End-To-End Implementation Modes: Imitation Learning

- End-to-end Implementation Method: Reinforcement Learning

- Mainstream reinforcement learning algorithms

- 1.2.3 End-to-end Model Verification Modes

- Evaluation Methods for End-to-End Autonomous Driving Datasets

- Closed Loop Benchmarking Platform for Evaluating End-To-End Autonomous Driving Systems (E2E-AD) Capabilities

- Three Major Simulation Tests for End-to-end Autonomous Driving Models (2) - HUGSIM

- Three Major Simulation Tests for End-to-end Autonomous Driving Models (3) - DriveArena

- 1.3 Typical End-to-End Intelligent Driving Cases

- SenseTime UniAD: Path Planning-oriented AI Foundation Model Provides E2E Commercial Scenario Applications (1)

- SenseTime UniAD: Path Planning-oriented AI Foundation Model Provides E2E Commercial Scenario Applications(2)

- Technical Principle and Architecture of SenseTime UniAD

- Technical Principle and Architecture of Horizon Robotics VAD

- Technical Principle and Architecture of Horizon Robotics VADv2

- VADv2 Training

- Technical Principle and Architecture of DriveVLM

- Li Auto Adopts MoE

- MoE and STR2

- Shanghai Qi Zhi Institute's E2E-AD Model SGADS: A Safe and Generalized E2E-AD System Based on Reinforcement Learning and Imitation Learning

- Shanghai Jiao Tong University's E2E Active Learning ActiveAD Case: Breaking the Data Labeling Bottleneck of Intelligent Driving, and Data-driven

- End-to-end Intelligent Driving System Based on Foundation Models

- 1.4 Foundation Models

- 1.4.1 Foundation Models Introduction

- Core of End-to-end System - Foundation Models

- Foundation Models (1) - Large Language Models: Application Case in Intelligent Driving

- Foundation Models (2) - Vision Foundation Models: Application in Intelligent Driving

- Foundation Models (2) - Vision Foundation Models: Latent Diffusion Model Framework

- Foundation Models (2) - Vision Foundation Models: Wayve GAIA-1

- Foundation Models (2) - Vision Foundation Models: DriveDreamer Framework

- Foundation Models (3) - Large Multimodal Models: MFM

- Foundation Models (3) - Large Multimodal Models: Application of GPT-4V in Intelligent Driving

- 1.4.2 Foundation Models: Large Multimodal Models

- Development of and Introduction to Large Multimodal Models

- Large Multimodal Models VS Single-modal Foundation Models (1)

- Large Multimodal Models VS Single-modal Foundation Models (2)

- Technology Panorama of Large Multimodal Models

- Multimodal Information Representation

- 1.4.3 Foundation Models: Multimodal Large Language Models

- Multimodal Large Language Models (MLLMs)

- Architecture and Core Components of MLLMs

- MLLMs - Mainstream Models

- Application of MLLMs in Intelligent Driving

- Clip Model

- LLaVA Model

- 1.5 VLM & VLA

- Application of Vision-Language Models (VLMs)

- Development History of VLMs

- Architecture of VLMs

- Application Principle of VLMs in End-to-end Intelligent Driving

- Application of VLMs in End-to-end Intelligent Driving

- VLM->VLA

- VLA (Vision-Language-Action Model)

- VLA Principle

- Classification of VLA Models

- VLA Is Not Yet Mature, and Waymo EMMA Is Relatively Complete Overall

- Core Functions of End-to-end Multimodal Model for Intelligent Driving (EMMA)

- 1.6 World Models

- Definition and Application

- Basic Architecture

- Difficulties in Framework Setting and Implementation

- Video Generation Methods based on Transformer and Diffusion Model

- WorldDreamer's Technical Principle and Path

- World Model May Be One of the Ideal Ways to Achieve End-to-end

- Generation of Virtual Training Data

- Tesla's World Model

- Nvidia

- InfinityDrive: Breaking Time Limits in Driving World Models

- Parameter Performance of SenseAuto InfinityDrive

- SenseAuto InfinityDrive Pipeline

- SenseTime DiT Architecture and Main Indicators (FID/FV) for Evaluating Video Generation

- 1.7 Comparison between E2E-AD Motion Planning Models

- E2E-AD Trajectory Planning: Comparison between Several Classical Models in Industry and Academia

- Tesla: Perception and Decision Full Stack Integrated Model

- Tesla: Perception and Decision Full Stack Integrated Model

- Momenta: End-to-end Planning Architecture Based on BEV Space

- Horizon Robotics 2023: End-to-end Planning Architecture Based on BEV Space

- DriveIRL: End-to-end Planning Architecture Based on BEV Space

- GenAD: Generative End-to-end Model

- GenAD: Generative End-to-end Model

- 1.8 VLA Model

- VLA Concept

- One Core of End-to-end: Large Language Models

- VLA Technical Architecture and Key Technologies

- Advantages of VLA (1)

- Advantages of VLA (2)

- Advantages of VLA (2)

- Challenges in VLA Model Deployment (1)

- Real-time and Memory Usage Challenges for VLA Model Deployment

- Challenges in VLA Model Deployment (2)

- Challenges of End-to-end Deployment - Data ( 2 )

- Challenges of VLA Model Deployment - Planning Capabilities for Long Time Series Tasks

- 1.9 Diffusion Models

- Four Mainstream Generation Models

- Diffusion Model Principle

- Diffusion Model Optimizes the Core Links of Autonomous Driving Trajectory Generation

- Diffusion Model Optimizes Autonomous Driving Trajectory Generation

- Application of Diffusion Model in the Field of Assisted Driving

- Practical Application Cases of Diffusion Model

- 1.10 Embodied Language Models (ELM)

- ELMs Accelerate the Implementation of End-to-end Solutions

- ELMs Accelerate the Implementation of End-to-end Solutions

- Application Scenarios (1)

- Application Scenarios (2) Data Close Loop

- Application Scenarios (3) Data Collecting

- Application Scenarios (4) Location/ Description Labeling

- Application Scenarios (5) Token Selection

- Application Scenarios (6) Benchmark

- Application Scenarios (7) Experiments

- Application Scenarios (7) Experiments

- Limitations and Positive Impacts

2 Technology Roadmap and Development Trends of E2E-AD

- 2.1 Technology Trends of End-to-End Intelligent Driving

- Trend 1 - Paradigm Revolution in Intelligent Driving ADS: 2024 Can be Considered as the First Year of E2E-AD (E2E-AD)

- Trend 2 - Major Development Frameworks of AGI: Robot and Intelligent Driving will be the Two Mainstream E2E Application Scenarios (1)

- Trend 2 - Major Development Frameworks of AGI: Robot and Intelligent Driving will be the Two Mainstream E2E Application Scenarios (2)

- Trend 2 - Major Development Frameworks of AGI: Robot and Intelligent Driving will be the Two Mainstream E2E Application Scenarios (3)

- Trend 3: Development Direction of E2E-AD Is to Achieve Humanized Driving

- Trend 4: Generative AI and E2E-AD Fused and Innovate, Scale of Data and Model Parameters Further Unleashes Potential of Basic Models (1)

- Trend 4: Generative AI and E2E-AD Fused and Innovate, Scale of Data and Model Parameters Further Unleashes Potential of Basic Models (2)

- Trend 5: E2E-AD Requires Higher Costs and Computing Power

- Trend 6: General World Model is One of the Best Implementation Paths for Intelligent Driving (1)

- Trend 6: General World Model is One of the Best Implementation Paths for Intelligent Driving (2)

- Trend 6: General World Model is One of the Best Implementation Paths for Intelligent Driving (3)

- Trend 7 - End-to-end Test Begins to Move from Open to Closed Loop

- Trend 8: Application Ideas and Implementation Pace of Foundation Model in E2E-AD

- End-to-end Trend 2: Foundation Model

- End-to-end Trend 3: Zero-shot Learning

- 2.2 Market Trends of End-to-End Intelligent Driving

- Layout of Mainstream E2E System Solutions

- Comparison of End-to-end System Solution Layout between Tier 1 Suppliers (1)

- Comparison of End-to-end System Solution Layout between Tier 1 Suppliers (2)

- Comparison of End-to-end System Solution Layout between Tier 1 Suppliers (3)

- Comparison of End-to-end System Solution Layout between Other Intelligent Driving Companies

- Comparison of End-to-end System Solution Layout between OEMs (1)

- Comparison of End-to-end System Solution Layout between OEMs (2)

- Comparison of End-to-end System Solution Layout between OEMs (3)

- Comparison of End-to-end System Solution Layout between Other Intelligent Driving Companies

- Comparison of End-to-end System Solution Layout between OEMs (1)

- Comparison of End-to-end System Solution Layout between OEMs (2)

- Comparison of End-to-end System Solution Layout between OEMs (3)

- E2E System Enables Leading OEMs to Implement Map-free City NOA on a Large Scale

- Comparison 1 of NOA and End-to-end Implementation Schedules between Sub-brands of Domestic Mainstream OEMs: Changan, GWM, BYD

- Comparison 2 of NOA and End-to-end Implementation Schedules between Sub-brands of Domestic Mainstream OEMs :FAW, GAC and Geely

- Comparison 3 of NOA and End-to-end Implementation Schedules between Sub-brands of Domestic Mainstream OEMs : BAIC, SAIC, Chery and Dongfeng

- Comparison 4 of NOA and End-to-end Implementation Schedules between Sub-brands of Domestic Mainstream OEMs: NIO, Xpeng Motors, Li Auto, Xiaomi and Leapmotor

- 2.3 End-to-end Intelligent Driving Team Building

- Impacts of End-to-end Foundation Models on Organizational Structure (1)

- Impacts of End-to-end Foundation Models on Organizational Structure (2)

- Leading People in End-to-end Intelligent Driving For Domestic OEMs and Suppliers

- E2E-AD Team Building of Domestic OEMs (1): XIAOMI

- End-to-end Intelligent Driving Team Building of Domestic OEMs: Baidu /Li Auto

- End-to-end Intelligent Driving Team Building of Domestic OEMs: Xiaomi Auto /Baidu

- End-to-end Intelligent Driving Team Building of Domestic OEMs: Li Auto

- E2E-AD Team Building of Domestic OEMs (4): Li Auto

- E2E-AD Team Building of Domestic OEMs (5): XPeng

- End-to-end Intelligent Driving Team Building of Domestic OEMs: BYD

- End-to-end Intelligent Driving Team Building of Domestic OEMs: NIO

- Team Building of End-to-end Intelligent Driving Suppliers: Momenta

- Team Building of End-to-end Intelligent Driving Suppliers: DeepRoute.ai

- End-to-end Intelligent Driving Team Building of Domestic OEMs: Huawei

- Team Building of End-to-end Intelligent Driving Suppliers: Zhuoyu Technology

- Team Building of End-to-end Intelligent Driving Suppliers: Horizon Robotics

3 End-to-end Intelligent Driving Suppliers

- 3.1 MOMENTA

- Profile

- R6 Flywheel Large Model to be Released in 2025H2

- One-model End-to-end Solutions (1)

- One-model End-to-end Solutions (2)

- End-to-end Planning Architecture

- One-model End-to-end Mass Production Empowers the Large-scale Implementation of NOA in Mapless Cities

- High-level Intelligent Driving and End-to-end Mass Production Customers

- Mass Production and Clients

- 3.2 DeepRoute.ai

- Product Layout and Strategic Deployment

- End-to-end Layout

- Difference between End-to-end Solutions and Traditional Solutions

- Implementation Progress in End-to-end Solutions

- DeepRoute.ai Announces Deep Cooperation with Volcano Engine

- RoadAGI Platform-AI Spark

- DeepRoute.ai VLA Model Architecture

- End-to-end VLA Model Analysis

- Designated End-to-end Mass Production Projects and VLA Model Features

- Mass Production of DeepRoute.ai's End-to-end Solutions

- Hierarchical Hint Tokens

- End-to-end Training Solution - DINOv2

- Application Value of DINOv2 in the Field of Computer Vision

- Intelligent Driving VQA Task Evaluation Data Sets

- BLEU (Bi-Lingual Evaluation Understudy) and Consensus-based Image Description Evaluation (CIDEr)

- Score Comparison between HoP and Huawei

- 3.3 Huawei

- End-to-end Evolution Path

- ADS 4's All-New WEWA Architecture

- Deep Integration of ADS 4 with XMC and Cloud-based Simulation Validation

- ADS 4: Highway L3 Commercial Solution

- End-to-End Mass Production Status

- Development History of Huawei's Intelligent Automotive Solution Business Unit

- ADS 2.0 (1): End-to-end Concept and Perception Algorithm of ADS

- ADS 2.0 (2): End-to-end Concept and Perception Algorithm of ADS

- Summary of Huawei ADS 2.0

- ADS 3.0 (1)

- ADS 3.0 (2): End-to-end

- ADS 3.0 (3): End-to-end

- ADS 3.0 (3): ASD 3.0 VS. ASD 2.0

- End-to-end Solution Application Cases of ADS 3.0 (1): STELATO S9

- End-to-end Solution Application Cases of ADS 3.0 (2): LUXEED R7

- End-to-end Solution Application Cases of ADS 3.0 (3): AITO

- End-to-end Intelligent Driving Solutions of Multimodal LLMs

- End-to-end Testing-VQA Tasks

- Architecture of DriveGPT4

- End-to-end Training Solution Examples

- The Training of DriveGPT4 Is Divided Into Two Stages

- Comparison between DriveGPT4 and GPT4V

- 3.4 Horizon Robotics

- Profile

- Main Partners

- Urban Driving Assistance System HSD

- Journey 6 Series Chips

- UMGen: Unified Multimodal Driving Scene Generation Framework

- GoalFlow: Goal Point Driven, Unlocking Future End-To-End Generative Policies

- MomAD: Momentum-Aware Planning for End-To-End Autonomous Driving

- DiffusionDrive: Towards Generative Multimodal End-To-End Autonomous Driving

- RAD: End-To-End Reinforcement Learning Post-Training Paradigm Based On 3DGS Digital Twin World

- Mass Production

- End-to-end Super Drive and Its Advantages

- Architecture and Technical Principle of Super Drive

- Senna Intelligent Driving System (Foundation Model + End-to-end)

- Core Technology and Training Method of Senna

- Core Module of Senna

- 3.5 Zhuoyu Technology

- Profile

- R&D and Production

- Evolution of ClixPilot End-To-End Algorithm

- Architecture of End-To-End World Model

- Two-Phase Training Model of End-To-End World Model

- Core Functions of Generative Intelligent Driving GenDrive

- Key Technologies of Generative Intelligent Driving

- Mass Production of End-To-End

- Two-model End-to-end Parsing

- One-model Explainable End-to-end Parsing

- End-to-end Mass Production Customers

- 3.6 NVIDIA

- Profile

- Hydra-MDP++: NVIDIA's End-to-End Autonomous Driving Framework Combining Human Demonstrations and Rule-Based Expert Knowledge

- World Foundation Model Development Platform - Cosmos

- Cosmos Training Paradigm

- Intelligent Driving Solution

- DRIVE Thor

- Basic Platform for Intelligent Driving: NVIDIA DriveOS

- Core Design Philosophy of NVIDIA Multicast

- Drive Thor

- Latest End-to-end Intelligent Driving Framework: Hydra-MDP

- Self-developed Model Architecture: Model Room

- IM, NVIDIA and Momenta Collaborate to Create a Production-ready Intelligent Driving Solution Based on Thor

- Latest End-to-end Intelligent Driving Framework: Hydra-MDP

- 3.7 Bosch

- Vertical and Horizontal Assisted Driving Solution

- Urban Assisted Driving Solution Based on End-to-End Model

- End-to-End Mass Production Status

- Intelligent Driving China Strategic Layout (1)

- Based on the End-to-end Development Trend, Bosch Intelligent Driving initiates the Organizational Structure Reform

- After Launching the BEV+Transformer High-level Intelligent Driving Solution, Bosch Accelerates Its End-to-end Intelligent Driving Layout

- Intelligent Driving Algorithm Evolution Planning

- 3.8 Baidu

- DriVerse: Novel Navigation World Model Enabled by Multimodal Trajectory Prompts and Motion Alignment

- Profile of Apollo

- Strategic Layout in the Field of Intelligent Driving

- Two-model End-to-end: Adopting Strategy of Segmented Training before Joint Training

- Production Models Based on Two-model End-to-end Technology Architecture: Jiyue 07

- Baidu Auto Cloud 3.0 Enables End-to-end Systems from Three Aspects

- 3.9 SenseAuto

- Profile

- Evolution of Intelligent Driving End-To-End Algorithm

- Architecture of R-UniAD

- R-UniAD Practical Demonstration: Complex Scenario Mining, 4D Simulation Reproduction, Reinforcement Learning, Generalization Verification

- Kaiwu World Model 2.0

- Mass Production

- UniAD End-to-end Solution

- DriveAGI: The Next-generation Intelligent Driving Foundation Model and Its Advantages

- DiFSD: SenseAuto's End-to-end Intelligent Driving System That Simulates Human Driving Behavior

- DiFSD: Technical Interpretation

- 3.10 QCraft

- Profile

- "Safe End-to-End" Architecture

- "Safe End-to-End" Data and Model Training Closed Loop

- Driven-by-QCraft Mid-to-High Level ADAS Solutions

- End-to-End Mass Production Status

- "Driven-by-QCraft" High-level Intelligent Driving Solution

- End-to-end Layout

- Advantages of End-to-end Layout

- 3.11 Wayve

- Profile

- Advantages of AV 2.0

- GAIA-1 World Model - Architecture

- GAIA-1 World Model - Token

- GAIA-1 World Model - Generation Effect

- LINGO-2

- 3.12 Waymo

- Waymo Foundation Model

- End-to-end Multimodal Model for Intelligent Driving (EMMA)

- EMMA Analysis: Multimodal Input

- EMMA Analysis: Defining Driving Tasks as Visual Q&A

- EMMA Analysis: Introducing Thinking Chain Reasoning to Enhance Interpretability

- Limitations of EMMA

- 3.13 GigaAI

- Profile

- World Model Evolution

- 4D Generative World Model Layered Architecture

- World Model Implementation

- ReconDreamer

- DriveDreamer

- DriveDreamer4D

- 3.14 LightWheel AI

- Profile

- Data Requirements for End-to-End Architecture

- Tsinghua x LightWheel AI: Autonomous Driving World Model for Generating and Understanding Accident Scenarios

- Core Technology Stack: Real2Sim2Real + Realism Validation

- Data Annotation and Synthetic Data

- 3.15 PhiGent Robotics

- Profile

- Full-Domain End-To-End Driving Assistance Solution PhiGo Max

- Detailed Explanation of Progressive One-Model End-To-End Solution Based on End-To-End Technology Paradigm

- Detailed Explanation of End-To-End Technology

- 3.16 Nullmax

- Profile

- MaxDrive Driving Assistance Solution

- Next-Generation Autonomous Driving Technology - Nullmax Intelligence

- End-To-End Technology Architecture

- End-To-End Data Platform

- HiP-AD: Nullmax's End-To-End Autonomous Driving Framework Based on Multi-Granularity Planning and Deformable Attention

- Mass Production

- 3.17 Mobileye

- Profile

- CAIS (Composite AI System) Route

- Surround ADAS(TM)

- SuperVision(TM)/Chauffeur

- Production

- 3.18 Motovis

- Profile

- Full-stack End-to-end Intelligent Driving System

- Production

4 End-to-end Intelligent Driving Layout of OEMs

- 4.1 Xpeng's End-to-end Intelligent Driving Layout

- End-to-end System Evolution Path

- World Foundation Model

- Core Technical Approach of the World Foundation Model

- Cloud Model Factory

- Three Phased Achievements in World Foundation Model Development

- End-to-end System (1): Architecture

- End-to-end System (2): Intelligent Driving Model

- End-to-end System (3): AI+XNGP

- End-to-End System (4): Organizational Transformation

- Data Collection, Annotation and Training

- 4.2 Li Auto's End-to-end Intelligent Driving Layout

- End-To-End Evolution

- From E2E+VLM Dual System to MindVLA

- Architecture of MindVLA Model

- Key Technology Point 1 of MindVLA: Great 3D Physical Space Understanding Capability

- Key Technology Point 2 of MindVLA: Combination with LLM

- Key Technology Point 3 of MindVLA: Combination of Diffusion and RLHF

- Key Technology Point 4 of MindVLA: World Model and INVADE-Accelerated Reinforcement Learning

- End-to-end Solutions (1): Iteration of System 1

- End-to-end Solutions (2): System 1 (end-to-end model) + System 2 (VLM)

- End-to-end Solutions (3): Next-generation Intelligent Driving Technology Architecture

- End-to-end Solutions (4): DriveMLM: Architecture

- End-to-end Solutions (5): DriveMLM: Rendering Effects

- End-to-end Solutions (6): DriveVLM: Processing of BEV and Text Features

- End-to-end Solutions (7):L3 Intelligent Driving

- End-to-end Solutions (8): Build a Complete Foundation Model from AD Max 3.0

- Technical Layout: Chip

- Technical Layout: Data Closed Loop

- 4.3 Tesla's End-to-end Intelligent Driving Layout

- Interpretation of the 2024 AI Conference

- Development History of AD Algorithms

- End-to-end Process 2023-2024

- Development History of AD Algorithms (1):Focus on Perception

- Development History of AD Algorithms (2): Shadow Mode

- Development History of AD Algorithms (3): Introduction of Occupancy Network

- Development History of AD Algorithms (4): Occupancy Network

- Development History of AD Algorithms (5): HydraNet

- Development History of AD Algorithms (6):FSD V12

- Tesla: Core Elements of the Full-stack Perception and Decision Integrated Model

- "End-to-end" Algorithms

- World Models

- Data Engines

- Dojo Supercomputing Center: Overview

- Dojo Supercomputing Center: D1 Chip-integrated Training Tile

- Dojo Supercomputing Center: Computing Power Development Planning

- Zeron Won Runner-up in International End-to-End Ground Challenge

- 4.4 Zeron's End-to-end Intelligent Driving Layout

- Profile

- End-to-end Intelligent Driving System Based on Foundation Models

- Advantages of End-to-end Driving System

- 4.5 Geely & ZEEKR's End-to-end Intelligent Driving Layout

- One-Model End-to-End Large Model

- L3 Intelligent Driving Technology Architecture

- "Qianli Haohan" Advanced Intelligent Driving Solutions

- Geely's ADAS Technology Layout: Geely Xingrui Intelligent Computing Center

- Xingrui AI Foundation Model

- Application of Geely's Intelligent Driving Foundation Model Technology

- Zeekr Overview

- ZEEKR's End-to-end System: Two-model Solution

- ZEEKR Officially Released End-to-end Plus

- Examples of Models with ZEEKR's End-to-end System

- 4.6 Xiaomi Auto's End-to-end Intelligent Driving Layout

- Profile

- End-to-End VLA Autonomous Driving Solution Orion

- Analysis of the ORION Framework

- Physical World Modeling Architecture

- Multi-stage End-to-end Modeling Of Three Layers

- Long Video Generation Framework-MiLA

- End-to-end Technology Enables All-scenario Intelligent Driving from Parking Spaces to Parking Spaces

- Road Foundation Models Build HD Maps through Road Topology

- New-generation HAD Accesses End-to-end System

- End-to-end Technology Route:end-to-end +VLM

- 4.7 NIO's End-to-end Intelligent Driving Layout

- Intelligent Driving R&D Team Reorganization with an Organizational Structure Oriented Towards End-to-end System

- From Modeling to End-to-end, World Models Are the Next

- World Model End-to-end System - NWM (NIO World Model)

- Intelligent Driving Architecture: NADArch 2.0

- End-to-end R&D Tool Chain

- Imagination, Reconstruction and Group Intelligence of World Models

- Nsim (NIO Simulation)

- Software and Hardware Synergy Capabilities Continue to Strengthen, Moving towards the End-to-end System Era

- 4.8 Changan Automobile's End-to-end Intelligent Driving Layout

- Beidou Tianshu 2.0 - Tianshu Intelligent Driving

- Tianshu Intelligent Driving Software Architecture

- Brand Layout

- ADAS Strategy: "Beidou Tianshu"

- End-to-end System (1):BEV+LLM+GoT

- Production Models with End-to-end System: Changan NEVO E07

- Production Models with End-to-end System: Changan NEVO E07

- 4.9 Mercedes-Benz's End-to-end Intelligent Driving Layout

- Mercedes-Benz and Momenta team up to develop driver assistance systems

- Brand New "Vision-only Solutions without Maps, L2++ All-scenario High-level Intelligent Driving Functions"

- Brand New Self-developed MB.OS

- Cooperation with Momenta

- 4.10 Chery's End-to-end Intelligent Driving Layout

- Profile of ZDRIVE.AI

- Five Intelligent Driving Technologies

- E2E Autonomous Driving Architecture and Advantages

- E2E Autonomous Driving Digital Assessment Technology

- Falcon Intelligence Driving E2E Solution

- Falcon Solution Models and Future Planning

- Chery's End-to-end System Development Planning

- 4.11 GAC

- X-Soul Zhixing E2E Embodied Reasoning Model Architecture

- Core Technologies

- Intelligent Diviring Product Platform

- 4.12 Leapmotor

- E2E Intelligent Driving

- E2E Intelligent Driving Application Scenario

- 4.13 IM Motors

- Iteration History of Intelligent Driving System

- Cooperation with Momenta

- IM AD E2E 2.0 Large Model

- IM AD E2E 2.0 Intelligent Driving Large Model Technology

- IM AD E2E 2.0 Intelligent Driving Large Model Application Scenario

- 4.14 Hongqi

- Hongqi Sinan Intelligent Driving Technology Architecture

- Sinan E2E Large Model Technology

- Sinan Intelligent Driving Solution

- Sinan Intelligent Driving Solution Models and Future Planning