
Market Research Report

Product Code: 1457877

AI Foundation Models' Impacts on Vehicle Intelligent Design and Development Research Report, 2024


Published: March 10, 2024

Publisher: ResearchInChina

Pages: 160 pages (English)

Delivery: Same day to next business day


AI基盤モデルが活況を呈しています。ChapGPTやSORAの登場は衝撃的です。AIフロンティアの科学者や起業家は、AI基盤モデルがあらゆる生活、特に技術関連分野を再構築すると指摘しています。技術製品として、インテリジェントカーはAI基盤モデルによってどのように変わるのか。

基盤モデルはどのようにインテリジェントカーを再構築するか

2023年、Changan AutomobileはL1~L6層を含む独自のソフトウェア駆動型アーキテクチャ(SDA)にAIエッジとAIサービス層を追加しました。AI技術はインテリジェントカーのL3 EEA層、L4車両OS層、L6車両機能用途層(コックピット、接続性、インテリジェントドライビングを含む)、L7クラウドビッグデータ層などのほとんどの層に影響を及ぼしていることが分かります。L1機械レイヤーのシャーシ部分とL2動力レイヤーのバッテリー部分は、実際にAI応用に関与しています。

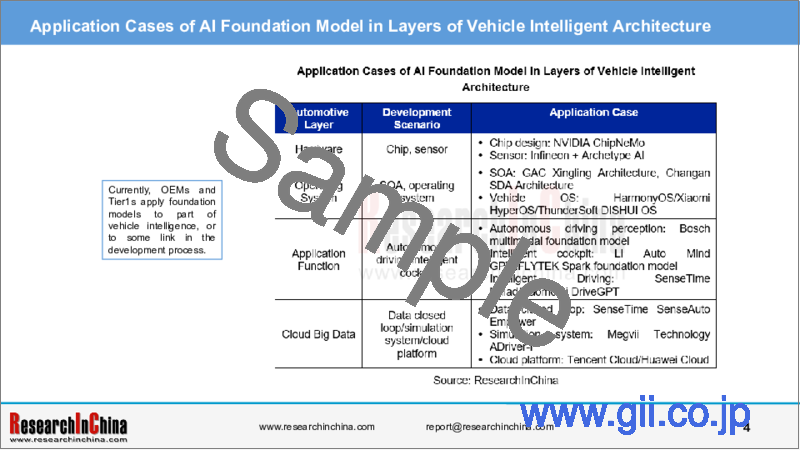

現在、OEMとTier 1は、基盤モデルを車両インテリジェンスの一部、または開発プロセスの一部のリンクに利用しています。

自動車におけるAI基盤モデルの一般的な応用動向を見る際、基盤モデルの進化にも見当をつける必要があります。Tencent Research Instituteの調査結果によると、AIは脳からAI Agentへ、CoPilotから自動運転へと進化します。

では、AI Agentとは何か

基盤モデル/AI AgentはOS/APPに取って代わるのか

ResearchInChinaは以下の見解を受け入れています。AI基盤モデルはOSであり、AI Agentはアプリケーションです。インテリジェント製品の開発パラダイムは、従来のOS-APPのエコシステムパラダイムから、AI基盤モデル-AI Agentのエコシステムパラダイムに変化します。

AI Agentとは、単純なテキスト生成を超えたAIシステムです。AI Agentは、大規模言語モデル(LLM)をコアコンピューティングエンジンとして使用し、会話、タスクの実行、推論を行い、ある程度の自律性を持つことができます。つまり、AI Agentは複雑な推論能力、メモリ、タスク実行方法を持つシステムです。したがって、NIOのコックピットに搭載されたNOMI GPTとTeslaFSD V12が、それぞれコックピット領域とインテリジェントドライビング領域におけるAI Agentであることは明らかです。

プラットフォームレベルのAI技術であるAI基盤モデルには、ChatGPTやERNIE Botのような一流技術企業が立ち上げたものが含まれます。プラットフォームレベルのAIは、オペレーティングシステムをあらゆる面で強化する技術基盤となります。これは次世代OSの新しいカーネルとみなされています。従来のオペレーティングシステムのカーネルは主に、GPUやメモリなどのシステムのハードウェアリソースの管理とスケジューリングを担当し、システムの正常な動作と効率的な利用を保証しています。しかし、ユーザーの要求が高まるにつれ、AIシステムは人間に関連する多くのパーソナライズされた経験を解析する必要があります。

個人的知識ベース、人々の位置やステータスの認識、人々の習慣や趣味、その他の個別化要素については、従来のオペレーティングシステムでは効果的な計算や処理ができません。そのため、これらの要件を満たすための全く新しいカーネルが必要となります。プラットフォームレベルのAI基盤モデルの強みは、複数の個人的要素を管理および処理し、オペレーティングシステムがユーザーの意図を正確に認識できることです。このような機能により、新しいオペレーティングシステムは「何を望んでいるかを推測し、何が必要かを理解する」というインテリジェントな体験をすべての人にもたらすことができます。

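This report examines China's automotive industry and provides information on the current status and future trends of AI foundation models, their impacts on vehicle design, and application cases.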


AI foundation models are booming, and the launches of ChatGPT and Sora have been a shock. Scientists and entrepreneurs at the AI frontier say that AI foundation models will reshape every walk of life, especially tech-related fields. How will intelligent vehicles, as technology products, be changed by AI foundation models?

How will foundation models rebuild intelligent vehicles?

Following the "Automotive AI Foundation Model Technology and Application Trends Report, 2023-2024", which discusses the impacts of AI foundation models on the automotive industry from a macro perspective, ResearchInChina has released the "AI Foundation Models' Impacts on Vehicle Intelligent Design and Development Research Report, 2024". This second report examines how AI foundation models affect vehicle intelligent design and development in areas such as hardware, operating systems, application functions, and cloud big data.

In 2023, Changan Automobile added AI edge and AI service layers to its original software-driven architecture (SDA), which comprises layers L1-L6. This shows that AI technology already affects most layers of the intelligent vehicle: the L3 EEA layer, the L4 vehicle OS layer, the L6 vehicle function application layer (including cockpit, connectivity and intelligent driving), the L7 cloud big data layer, and so on. Even the chassis in the L1 mechanical layer and the battery in the L2 power layer already involve AI applications.

Currently, OEMs and Tier 1 suppliers apply foundation models to parts of vehicle intelligence or to certain links in the development process.

When looking at the general trend of AI foundation model applications in vehicles, we also need to consider how foundation models themselves are evolving. According to research by Tencent Research Institute, AI will evolve from a brain to an AI Agent, and from Copilot to autonomous driving.

So, what is an AI Agent?

Will foundation models/AI Agents replace OS/apps?

ResearchInChina subscribes to the view that the AI foundation model is the OS and the AI Agent is the application. The development paradigm of intelligent products will shift from the conventional OS-APP ecosystem paradigm to an AI foundation model-AI Agent ecosystem paradigm.

What is an AI Agent? It is an AI system that goes beyond simple text generation. An AI Agent uses a large language model (LLM) as its core computing engine, which enables it to hold conversations, perform tasks, make inferences, and act with a degree of autonomy. In short, an AI Agent is a system with complex reasoning capabilities, memory, and the means to execute tasks. By this definition, NOMI GPT in NIO's cockpit and Tesla FSD V12 are AI Agents in the cockpit domain and the intelligent driving domain, respectively.
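The definition above can be illustrated with a minimal agent loop: an LLM as the core engine, plus memory and task execution. This is a hypothetical sketch, not NIO's or Tesla's implementation. The `scripted_model` function stands in for a real LLM, and `set_cabin_temperature`, `Decision`, `Agent` and every other name here are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Decision:
    """One step chosen by the model: either call a tool or return a final answer."""
    tool: Optional[str] = None
    argument: str = ""
    answer: Optional[str] = None


@dataclass
class Agent:
    model: Callable[[List[str]], Decision]           # core computing engine (an LLM in practice)
    tools: Dict[str, Callable[[str], str]]           # task-execution methods
    memory: List[str] = field(default_factory=list)  # running record of the interaction
    max_steps: int = 5                               # bound on autonomy per request

    def run(self, request: str) -> str:
        self.memory.append(f"user: {request}")
        for _ in range(self.max_steps):
            decision = self.model(self.memory)       # reason over everything remembered so far
            if decision.answer is not None:
                self.memory.append(f"agent: {decision.answer}")
                return decision.answer
            result = self.tools[decision.tool](decision.argument)
            self.memory.append(f"tool {decision.tool}: {result}")
        return "step limit reached"


def scripted_model(memory: List[str]) -> Decision:
    # Hypothetical stand-in for an LLM: call a tool for a fresh request,
    # then answer once the tool result is in memory.
    if memory[-1].startswith("user:"):
        return Decision(tool="set_cabin_temperature", argument="22")
    return Decision(answer=f"Done. {memory[-1]}")


def set_cabin_temperature(value: str) -> str:
    # Hypothetical vehicle function; a real system would go through the vehicle's service layer.
    return f"cabin temperature set to {value} C"


agent = Agent(model=scripted_model, tools={"set_cabin_temperature": set_cabin_temperature})
reply = agent.run("I'm cold")
print(reply)  # Done. tool set_cabin_temperature: cabin temperature set to 22 C
```

The `max_steps` bound is one simple way to express "a degree of autonomy". A production agent would also need to handle unknown tool names and model errors.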

AI foundation models, a platform-level AI technology, include those launched by first-tier technology companies, such as ChatGPT and ERNIE Bot. Platform-level AI can serve as the technological foundation that empowers operating systems in all aspects, and it is regarded as the new kernel of next-generation operating systems. The kernel of a conventional operating system is mainly responsible for managing and scheduling the system's hardware resources, such as the GPU and memory, to ensure normal operation and efficient use of the system. Yet as user demands grow, AI systems need to parse many personalized, human-related experiences.

Conventional operating systems cannot effectively compute and process personalization factors such as a personal knowledge base, awareness of a person's location and status, and personal habits and hobbies. A brand-new kernel is therefore needed to meet these requirements. The strength of platform-level AI foundation models is that they can manage and process multiple personal factors and help the operating system accurately recognize user intent. With such capabilities, brand-new operating systems can give everyone the intelligent experience of "guessing what you want and understanding what you need."
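One way to picture the "new kernel" role is to imagine the personalization factors listed above being gathered and passed to a foundation model together with the user's words. The sketch below only assembles that input. The `PersonalContext` fields and the prompt wording are hypothetical, and the model call itself is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PersonalContext:
    # Personalization factors named in the text; field names are hypothetical.
    knowledge_base: List[str]   # personal knowledge base entries
    location: str               # location awareness
    status: str                 # status awareness, e.g. "driving" or "parked"
    habits: List[str]           # habits and hobbies


def build_intent_prompt(utterance: str, ctx: PersonalContext) -> str:
    """Combine the user's request with personal context so a model can infer intent."""
    lines = [
        "Infer what the user wants, using the personal context below.",
        f"Location: {ctx.location}",
        f"Status: {ctx.status}",
        "Habits: " + "; ".join(ctx.habits),
        "Knowledge: " + "; ".join(ctx.knowledge_base),
        f"Request: {utterance}",
    ]
    return "\n".join(lines)


ctx = PersonalContext(
    knowledge_base=["usual coffee shop: Main St."],
    location="near the office",
    status="driving",
    habits=["coffee after the morning commute"],
)
prompt = build_intent_prompt("I need a break", ctx)
print(prompt)
```

With this input, a model might infer that "I need a break" means navigating to the usual coffee shop. That inference is the model's job and is not shown here.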

In automotive cockpit applications, true personalization also requires automakers to further customize the AI foundation model for the features of their own vehicle models and services. In other words, they need to build an AI Agent on top of a platform-level AI foundation model. For example, Geely models (such as Jiyue and Galaxy) use cockpit systems based on Baidu ERNIE Bot, and Mercedes-Benz's in-car voice assistant effectively becomes an AI Agent once it is connected to ChatGPT.

At present, intelligent driving AI Agents and cockpit AI Agents are separate, but they are likely to merge as cockpit-driving integration develops. When planning cockpit-driving integration, however, OEMs and Tier 1 suppliers must consider more than hardware-level integration. They also need to account for the operating system and vehicle system architecture, especially given the rapid evolution of foundation models/AI Agent models.

Foundation models/AI Agents are currently part of the operating system/app ecosystem. Will they replace the operating system/app model in the future? We think it is possible.

Foundation model-based agents will not only give everyone a dedicated, more capable intelligent assistant. They will also change the mode of human-machine cooperation and bring about broader human-machine fusion. There are three modes of human-AI cooperation: Embedding, Copilot, and Agent.

In intelligent driving, the Embedding mode is equivalent to L1-L2 autonomous driving; the Copilot mode, L2.5 and highway NOA; the Agent mode, urban NOA and L3 autonomous driving.
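The mapping in the previous sentence can be written as a small lookup table. This is a hypothetical sketch that only encodes the report's classification. The capability labels are the report's own wording, not formal SAE level definitions.

```python
from enum import Enum
from typing import Dict, List


class CooperationMode(Enum):
    EMBEDDING = "Embedding"
    COPILOT = "Copilot"
    AGENT = "Agent"


# Intelligent-driving equivalents of each human-AI cooperation mode, as stated in the report.
DRIVING_EQUIVALENTS: Dict[CooperationMode, List[str]] = {
    CooperationMode.EMBEDDING: ["L1-L2 autonomous driving"],
    CooperationMode.COPILOT: ["L2.5", "highway NOA"],
    CooperationMode.AGENT: ["urban NOA", "L3 autonomous driving"],
}


def mode_for(capability: str) -> CooperationMode:
    """Return the cooperation mode that a driving capability corresponds to."""
    for mode, capabilities in DRIVING_EQUIVALENTS.items():
        if capability in capabilities:
            return mode
    raise KeyError(f"unknown capability: {capability}")


print(mode_for("highway NOA").value)  # Copilot
```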

In the Agent mode, humans set goals and provide the necessary resources (e.g., computing power), AI then independently carries out most of the tasks, and humans supervise the process and evaluate the final results. In this mode, AI fully embodies the interactive, autonomous and adaptable characteristics of Agents and comes close to being an independent actor, while humans act mainly as supervisors and evaluators.

Many interactive operations that previously required IVI apps can now be performed through natural interaction (voice, gestures, etc.) in the AI Agent mode. An AI Agent can even proactively observe the inside and outside of the vehicle, ask the user whether to act, and perform the task once the user confirms.
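The observe, ask, then act pattern described above can be sketched as follows. The sensor fields, thresholds and actions are hypothetical. The simple rules in `propose` stand in for the agent's model-based reasoning. The point is only that nothing runs until the user confirms.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Observation:
    # Hypothetical readings from inside and outside the vehicle.
    cabin_temp_c: float
    raining: bool
    windows_open: bool


@dataclass
class Proposal:
    question: str               # the inquiry put to the user
    action: Callable[[], str]   # task to run only after confirmation


def propose(obs: Observation) -> Optional[Proposal]:
    """Hypothetical rules standing in for the agent's reasoning over observations."""
    if obs.raining and obs.windows_open:
        return Proposal("It is raining. Close the windows?", lambda: "windows closed")
    if obs.cabin_temp_c > 28:
        return Proposal("The cabin is warm. Turn on the A/C?", lambda: "A/C on")
    return None


def act_with_confirmation(obs: Observation, confirm: Callable[[str], bool]) -> str:
    proposal = propose(obs)
    if proposal is None:
        return "no action"
    if not confirm(proposal.question):  # the user must approve before anything runs
        return "declined"
    return proposal.action()


print(act_with_confirmation(Observation(24.0, True, True), confirm=lambda q: True))    # windows closed
print(act_with_confirmation(Observation(30.0, False, False), confirm=lambda q: False))  # declined
```

Passing `confirm` in as a function keeps the approval step separate from the decision logic. The same agent could then ask by voice, on screen, or through a test stub.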

The development of AI Agents is therefore bound to make many existing apps unnecessary, and it will have a disruptive impact on the development and application of intelligent cockpits and intelligent driving.

Current AI foundation models are not operating systems. They are a paradigm and architecture for AI models, focused on enabling machines to process multimodal data (text, images, video, etc.). An AI Agent is closer to an AI application or application layer: it relies on the underlying operating system and hardware to run and is not itself responsible for the basic management and resource scheduling of the computer system. In the future, AI foundation models are likely to be combined with the OS to form an AIOS.

The development of AI foundation models and AI Agents will have the following impacts on future operating systems:

- Applets will disappear or evolve into AI Agents that call foundation models;

- The OS may evolve into a "foundation model + computing chip core cluster" OS architecture;

- AI foundation models, as a platform, will redefine and empower all kinds of industrial application scenarios and give rise to more native applications centered on human-computer interaction, including autonomous vehicles, robots, and digital twin applications.

Table of Contents

1 Current Application and Future Trends of AI Foundation Models

- 1.1 Introduction to AI Foundation Model Application

- 1.1.1 Introduction to Various Types of AI Models

- 1.1.2 Multimodal Foundation Model VLM: Generic Architecture and Evolution Trends

- 1.1.3 Evolution Trends of Foundation Models Understanding 3D Road Scenarios

- 1.1.4 Summary of Evolution Trends of Multimodal Foundation Models Understanding Intelligent Vehicle Driving Road Scenarios

- 1.2 Current Application

- 1.2.1 Classification of AI Foundation Model Applications

- 1.2.2 Current Application of AI Foundation Models: Suppliers

- 1.2.3 Current Application of AI Foundation Models: OEMs

- 1.2.4 Application of AI Foundation Models in Different Vehicle Layers

- 1.2.5 Application Cases of AI Foundation Models in Different Scenarios

- 1.3 Sora Text-to-Video Foundation Model

- 1.3.1 Autonomous Driving (AD) Foundation Model: World Model and Video Generation

- 1.3.2 Visual Foundation Model: Historical Review and Comparative Analysis

- 1.3.3 Sora: Fundamental and Social Value

- 1.3.4 Sora: Introduction to the Basic System

- 1.3.5 Sora: Basic Functions

- 1.3.6 Sora: Advantages and Limitations

- 1.3.7 Sora: Case Studies

- 1.3.8 Interpretation of Sora Module (1)

- 1.3.9 Interpretation of Sora Module (2)

- 1.3.10 Interpretation of Sora Module (3)

- 1.3.11 Interpretation of Sora Module (4)

- 1.3.12 Sora vs GPT-4: Comparative Analysis of Computing Power

- 1.3.13 Sora: Predictions of How It Will Drive the Autonomous Driving Industry

- 1.4 Summary

- 1.4.1 AI Foundation Models Lead to Emergence Effects

- 1.4.2 Advantages of AI Foundation Models over Conventional AD Models

- 1.4.3 Impacts of AI Foundation Models on Operating Systems

- 1.4.4 Impacts of AI Foundation Models on SOA/Simulation Design/SoC Design

- 1.4.5 Impacts of AI Foundation Models on Autonomous Driving Development

- 1.4.6 AI Foundation Model Evolution Trend 1

- 1.4.7 AI Foundation Model Evolution Trend 2

- 1.4.8 Enduring Problems of AI Foundation Models in Intelligent Vehicle Industry and Solutions

- 1.4.9 Existing Problems of AI Foundation Models

- 1.4.10 Impacts of Sora on Intelligent Vehicle Industry and Prediction

- 1.4.11 Enduring Problems in AI Computing Chip Design and Solutions

- 1.4.12 AI Foundation Model: New Breakthroughs in Human-Machine Fusion Decision & Control

- 1.4.13 Summary of AI Foundation Models' Impacts on Vehicle Intelligence (1)

- 1.4.14 Summary of AI Foundation Models' Impacts on Vehicle Intelligence (2)

- 1.4.15 Summary of AI Foundation Models' Impacts on Vehicle Intelligence (3)

- 1.4.16 Summary of AI Foundation Models' Impacts on Vehicle Intelligence (4)

- 1.4.17 Summary of AI Foundation Models' Impacts on Vehicle Intelligence (5)

- 1.4.18 Summary of AI Foundation Models' Impacts on Vehicle Intelligence (6)

2 Impacts of AI Foundation Models on Vehicle Hardware Layer

- 2.1 Impacts of AI Foundation Models on Chip Design and Functions

- 2.1.1 Impact Trends of AI Foundation Models on Chips (1)

- 2.1.2 Impact Trends of AI Foundation Models on Chips (2)

- 2.1.3 Impact Trends of AI Foundation Models on Chips (3)

- 2.1.4 Changes LLMs Make to the Intelligent Vehicle SoC Design Paradigm

- 2.1.5 Case 1

- 2.1.6 Case 2

- 2.1.7 NVIDIA's DRIVE Family Chips for Autonomous Driving

- 2.1.8 Case 3

- 2.1.9 Impacts of AI Foundation Models on Cockpit Chip Design and Planning

- 2.1.10 Case 4

- 2.2 Impacts of AI Foundation Models on ADAS Sensor and Perception System Development

- 2.2.1 Foundation Model-Driven: Evolution Trends of Perception Capability Fusion and Sharing

- 2.2.2 Case 5

- 2.2.3 Case 6

3 Impacts of AI Foundation Models on Automotive SOA/Operating System

- 3.1 Impacts of AI Foundation Models on SOA/EE Architecture

- 3.1.1 Driving Factors for EEA Evolution

- 3.1.2 AI Foundation Model's Requirements for Computing Power Also Drive EEA Evolution

- 3.1.3 Multimodal Foundation Model and EEA 3.0

- 3.1.4 Development Directions of SOA in Terms of Foundation Model Agent Technology

- 3.1.5 Case 1

- 3.2 Impacts of AI Foundation Models on OS Design and Development

- 3.2.1 How AI Foundation Model Affects OS (1)

- 3.2.2 How AI Foundation Model Affects OS (2)

- 3.2.3 How AI Foundation Model Affects OS (3)

- 3.2.4 Case 2

- 3.2.5 Case 3

- 3.2.6 Case 4

- 3.2.7 Case 5

- 3.2.8 Case 6

4 Impacts of AI Foundation Models on Automotive Data Closed Loop/Simulation System

- 4.1 Impacts of AI Foundation Models on Data Closed Loop

- 4.1.1 Data-driven Autonomous Driving System

- 4.1.2 Data-driven and Data Closed Loop

- 4.1.3 Application of Foundation Models in Intelligent Driving

- 4.1.4 Changan's Data Closed Loop

- 4.1.5 Dotrust Technologies' Cloud Data Closed Loop Solution SimCycle

- 4.1.6 Huawei's Pangu Model and Data Closed Loop

- 4.1.7 How Huawei Pangu Model Enables Autonomous Driving Development Platforms

- 4.1.8 SenseTime's Data Closed Loop Solution

- 4.1.9 Juefx Technology Uses Horizon Robotics' Chips and Foundation Model to Complete Data Closed Loop

- 4.2 Impacts of AI Foundation Models on Simulation System

- 4.2.1 Autonomous Driving Vision Foundation Model (VFM)

- 4.2.2 Comparative Analysis of Sora and Tesla FSD-GWM

- 4.2.3 Comparison between Sora and LLM

- 4.2.4 Comparison between Sora and ChatSim

- 4.2.5 Multimodal Basic Foundation Model

- 4.2.6 Generative World Model GAIA-1 System Architecture

- 4.2.7 Case 1

- 4.2.8 Case 2

- 4.2.9 Case 3

- 4.2.10 Case 4

5 Impacts of AI Foundation Models on Autonomous Driving/Intelligent Cockpit

- 5.1 Impacts of AI Foundation Models on Autonomous Driving

- 5.1.1 AD Foundation Model: Application Scenarios and Strategic Significance

- 5.1.2 AD Foundation Model: Typical Applications

- 5.1.3 AD Foundation Model: Typical Applications and Limitations

- 5.1.4 AD Foundation Model: Main Adaptation Scenarios and Application Modes

- 5.1.5 VLM/MLM/VFM: Industrial Adaptation Scenarios and Main Applications

- 5.1.6 AD Foundation Model: Adaptation Scenarios Case

- 5.1.7 AD Vision Foundation Model: Data Representation and Main Applications

- 5.1.8 Evolution Trends of Intelligent Driving Domain Controller

- 5.1.9 Application of Multimodal Foundation Model in Intelligent Driving

- 5.2 Application Cases of AI Foundation Model in Autonomous Driving

- 5.2.1 Case 1

- 5.2.2 Case 2

- 5.2.3 Case 3

- 5.2.4 SenseTime Drive-MLM: World Model Construction

- 5.2.5 SenseTime Drive-MLM: Multimodal Generative Interaction

- 5.2.6 Case 4

- 5.2.7 Case 5

- 5.2.8 Case 6

- 5.2.9 Qualcomm Hybrid AI: Application in Intelligent Driving

- 5.2.10 Qualcomm AI Model Library

- 5.2.11 Case 7

- 5.2.12 Case 8

- 5.3 Impacts of AI Foundation Models on Cockpit Domain Controller

- 5.3.1 Multimodal Foundation Model

- 5.3.2 Impacts of Foundation Models on Interaction Design: Data Analysis and Decision

- 5.3.3 Impacts of Foundation Models on Interaction Design: Personalization through Autonomous Learning

- 5.3.4 Case 1

- 5.3.5 Case 2

- 5.3.6 Case 3

- 5.3.7 Case 4

- 5.3.8 Case 5

6 AI Agent and Automobile

- 6.1 What is AI Agent

- 6.2 Development Directions of AI Agent

- 6.3 Application Trends of AI Agent for Intelligent Vehicles

- 6.4 Application Cases of AI Agent in Vehicles